Making viruses in the lab deadlier and more able to spread: an accident waiting to happen

By Tatyana Novossiolova, Malcolm Dando | August 14, 2014

All rights come with limits and responsibilities. For example, US Supreme Court Justice Oliver Wendell Holmes famously noted that the right to free speech does not mean that one can falsely shout "fire" in a crowded theatre.

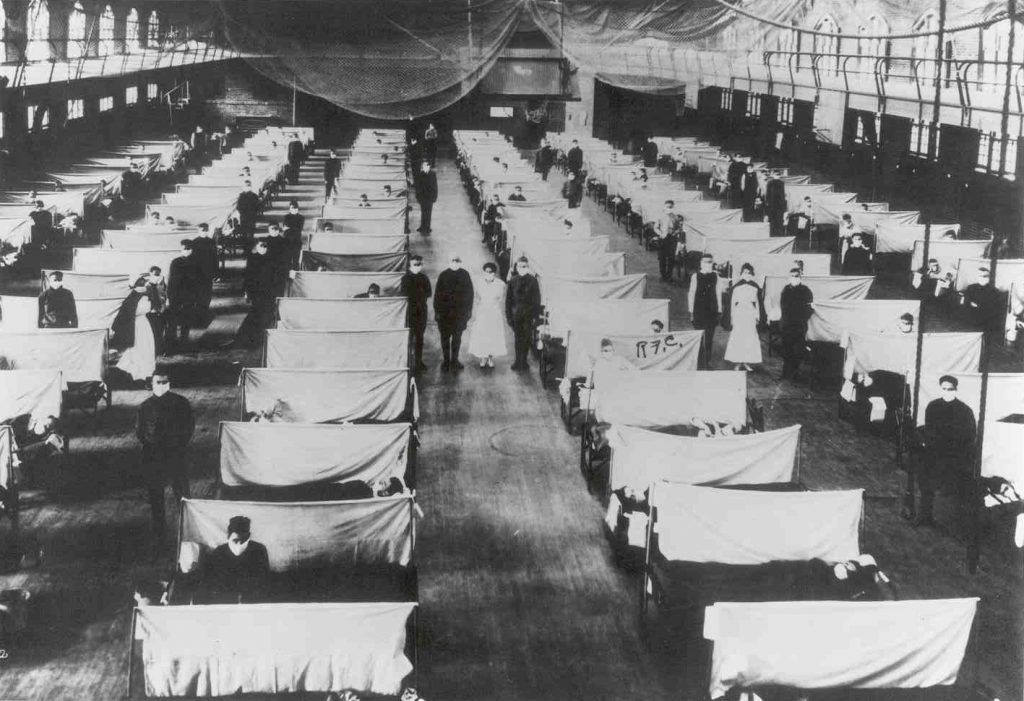

The same constraints and obligations apply to the right of scientific inquiry. That topic has been in the news since June, when researcher Yoshihiro Kawaoka of the University of Wisconsin-Madison published an article in the journal Cell Host and Microbe describing the construction of a new flu virus from wild avian flu strain genes that coded for proteins similar to those in the 1918 pandemic virus. The new virus was not only able to spread between ferrets, the best current model for human flu transmission, but was also more virulent than the original avian strains. (The 1918 Spanish Flu killed an estimated 50 million people; the molecular structure of the new strain is only three percent different from that of the 1918 version.)

Asked for comment by The Guardian newspaper, Robert, Lord May of Oxford, the former chief scientific advisor to the British Prime Minister and former president of the British Royal Society—one of the oldest and most prestigious scientific organizations in the world—condemned the work as "absolutely crazy," calling "labs of grossly ambitious people" a real source of danger.

As if that research were not enough to cause worry, in July a newspaper investigation asserted that Kawaoka was also conducting another controversial—but so far unpublished—study in which he genetically altered the 2009 strain of flu to enable it to evade immune responses, "effectively making the human population defenseless against re-emergence."

If the report is accurate, Kawaoka may have engineered a novel influenza strain capable of generating a human pandemic were it ever to escape from a laboratory. (“Pandemic” means that a disease occurs over a wide geographic area and affects an exceptionally high proportion of the population. By comparison, the Centers for Disease Control and Prevention define an “epidemic” merely as “the occurrence of more cases of disease than expected in a given area or among a specific group of people over a particular period of time.”)

An independent risk-benefit assessment of this work, conducted at the request of the journal Nature, found that Kawaoka’s work did indeed meet four of the seven criteria outlined in the US Government Policy for Oversight of Life Sciences Dual Use Research of Concern (DURC) of March 29, 2012. This meant the research was judged open to misuse that could threaten public health, and it therefore required additional high-level safety measures, including a formal risk-mitigation plan.

But even with these measures in place, this research still looks like an unnecessary risk, given the danger that the bioengineered viruses could become a pandemic threat and the view of some experts that there are far better and safer ways to unlock the mysteries of flu transmissibility. Claims that this work would help in manufacturing a preventive vaccine have been strongly contradicted by Stanley Plotkin of the Center for HIV-AIDS Vaccine Immunology, among other critics.

Part of the justification for these experiments, apparently, was to better understand the pandemic potential of influenza viruses by enhancing their properties, for example by altering their host range. Because the newly engineered viruses possess characteristics that their naturally occurring, or "wild," counterparts lack, virologists commonly refer to this type of study as "gain-of-function" research.

But given the likelihood of accidental or deliberate release of viruses created by gain-of-function experiments, the following issues should be considered before any such study is approved, and ideally they would have been taken up by those attending the Biological and Toxin Weapons Convention meeting earlier this month.

In a nutshell: The convention’s attendees should have agreed on a common understanding that all gain-of-function experiments be halted until an independent risk-benefit assessment is carried out; the scientific community should exhaust all alternative ways of obtaining the necessary information before approving gain-of-function experiments; biosecurity education and awareness-raising should be given priority as tools for fostering a culture of responsibility in the life sciences; and a modern version of the “Asilomar process” should be convened to identify the best approaches to achieving the global public health goals of defeating pandemic disease and ensuring the highest level of safety. (At Asilomar, California, in 1975, researchers studying recombinant DNA met to discuss whether their research posed risks, what its negative social implications could be, and how to contain the dangers.)

There will be another meeting of the Biological and Toxin Weapons Convention in December; one can only hope that it will consider these proposals then.

What, me worry? Sometimes the potential for accidents is inherent in a system, making accidents not only foreseeable but inevitable, even "normal." For example, Charles Perrow’s famous account of the Three Mile Island nuclear accident contends that the very structure and organization of nuclear power plants make them accident-prone. As a result, even with sophisticated safety designs and technical fixes in place, multiple, unexpected interactions among failures are still bound to occur, as the Fukushima disaster illustrated more recently.

Gain-of-function research in the life sciences is another example of an overly complex, human-designed system with multiple variables in which failure is inevitable. Some of the most dangerous biological agents, including anthrax, smallpox, and bird flu, have been mishandled in laboratories. As noted by the newly formed Cambridge Working Group, of which one of us (Malcolm Dando) is a member, these are far from exceptional cases: in the U.S. alone, biosafety incidents involving regulated pathogens "have been occurring on average over twice a week."

Such situations are not confined to the United States. Given China’s poor track record on laboratory containment, it was "appallingly irresponsible" (in Lord May’s words) for a team of Chinese scientists to create a hybrid of the H5N1 avian influenza virus and the H1N1 human flu virus, the strain that triggered a pandemic in 2009 and claimed several thousand lives. In a July 14, 2014, statement about the creation of such pathogens, the Cambridge Working Group noted:

An accidental infection with any pathogen is concerning. But accident risks with newly created “potential pandemic pathogens” raise grave new concerns. Laboratory creation of highly transmissible, novel strains of dangerous viruses, especially but not limited to influenza, poses substantially increased risks. An accidental infection in such a setting could trigger outbreaks that would be difficult or impossible to control.

Against this backdrop, the growing use of gain-of-function approaches for research requires more careful examination. And the potential consequences keep getting more catastrophic.

High-profile examples. In April 2014, Daniel Perez’s laboratory at the University of Maryland engineered an ostrich virus known as H7N1 to become “droplet transmissible,” meaning that the tiny amounts of virus carried in the minuscule airborne water droplets of a sneeze or a cough would be enough to infect someone. In other words, it could spread easily from one host to another.

So far, there has not been a single laboratory-confirmed case of human infection with H7N1. Unlike H5N1 and H7N9, it apparently poses no threat to humans.

However, while the chance of droplet transmission of H7N1 among humans is apparently low, the test animals it did manage to infect became very ill indeed: 60 percent of the ferrets infected through the airborne route died. This is a strikingly high lethality rate; by contrast, only about two percent of the humans who contracted the illness during the 1918 Spanish Flu pandemic died from it.

So it was with concern that the scientific world noted Kawaoka’s study describing the construction of a brand-new flu virus from wild avian flu strain genes that coded for proteins similar to those in the 1918 pandemic virus. The resulting pathogen was not only able to spread between ferrets, the best current animal model for human flu transmission, but was also more severe in its effects than the original avian strain. But the story does not end there. As an article in Nature revealed, the “controversial influenza study was run in accordance with new US biosecurity rules only after the US National Institute of Allergy and Infectious Diseases (NIAID) disagreed with the university’s assessments,” which shows a real need to reform the current system.

Avoiding a ‘normal’ accident. While biotechnology promises tremendous public health benefits, it also holds considerable potential for catastrophe, as these gain-of-function experiments illustrate. As scientific capabilities and work involving dangerous pathogens proliferate globally, so too do the risks and the prospects for failure, whether technological or human. Indeed, in assessing the rapidly evolving life-science landscape, Jose-Luis Sagripanti of the US Army Edgewood Chemical Biological Center, the nation’s principal research and development resource for chemical and biological defenses, has argued that “current genetic engineering technology and the practices of the community that sustains it have definitively displaced the potential threat of biological warfare beyond the risks posed by naturally occurring epidemics.”

Laboratories, however well equipped, do not exist in isolation; they are an integral part of a larger ecological system. As such, they are merely a buffer zone between the activities carried out inside and the wider environment beyond the laboratory door. Despite being technologically advanced and designed to ensure safety, this buffer zone is far from infallible. Indeed, as researchers from Harvard and Yale estimated earlier this year, there is almost a 20 percent chance of a laboratory-acquired infection occurring during gain-of-function work, even when it is performed under the highest and most rigorous levels of containment. Addressing the rapid expansion of gain-of-function studies is therefore both urgent and mandatory.
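A figure like "almost 20 percent" depends on how much laboratory work is being counted, because small yearly risks compound over time. As a purely illustrative sketch (the values of p and N below are assumptions chosen for the arithmetic, not numbers taken from the Harvard and Yale study), suppose each laboratory-year carries the same independent probability p of at least one laboratory-acquired infection. The chance of at least one such infection over N laboratory-years is then:

$$
P(\text{at least one infection}) = 1 - (1 - p)^{N}, \qquad p = 0.002,\; N = 100 \;\Longrightarrow\; 1 - 0.998^{100} \approx 0.18
$$

Under these assumed values (a 0.2 percent risk per laboratory-year, and ten laboratories each working for ten years), an individually small risk adds up to roughly an 18 percent chance of at least one infection. This is why the expansion of such work matters as much as the safety record of any single laboratory.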

In December 2013, the Foundation for Vaccine Research sent a letter to the European Commission calling for a “rigorous, comprehensive risk-benefit assessment of gain-of-function research” which “could help determine whether the unique risks to human life posed by these sorts of experiments are balanced by unique public health benefits which could not be achieved by alternative, safe scientific approaches.” Given the recent developments with influenza viruses, such an independent assessment of the costs and benefits of gain-of-function research is needed. It would help identify policy gaps and inform newer, better regulations and conventions. As David Relman of the Stanford University School of Medicine recently pointed out in the Journal of Infectious Diseases, the time has come for a balanced and dispassionate discussion that “must include difficult questions, such as whether there are experiments that should not be undertaken because of disproportionately high risk.”

Correction: Due to an editing error, the second paragraph of this article initially contained an erroneous description of research published in June in the journal Cell Host and Microbe. The Bulletin regrets the error.

